Ambrosinus-Toolkit v1.1.9 introduces a new feature: DPT technology, developed by René Ranftl’s research team at Intel. It is another AI tool that brings the power of artificial intelligence into the Grasshopper platform.

Dense Prediction Transformers (DPT) are a deep learning architecture used for dense prediction tasks in computer vision, such as monocular depth estimation and semantic segmentation. The key idea of DPT is to produce a label for every pixel of the image by combining global context, captured by a vision transformer backbone, with local detail recovered by a convolutional decoder. An extensive explanation of Ambrosinus-Toolkit and of the integration of AI Diffusion Models into AEC creative design processes will be published as an official research document, so stay tuned! 😉
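To make the "dense" part more concrete: a vision transformer splits the image into fixed-size patches and outputs one token per patch (plus a readout token), and DPT's decoder needs those tokens back in image layout. The sketch below shows only that reshaping step in plain Python, using the simplest readout-handling variant described in the DPT paper (dropping the readout token). The real model also projects and resamples the features with convolutions at several transformer stages, which is omitted here, and `reassemble` is a hypothetical name.

```python
from typing import List

Token = List[float]  # one embedding vector per token


def reassemble(tokens: List[Token], height: int, width: int, patch: int) -> List[List[Token]]:
    """Lay ViT output tokens out on a (height/patch) x (width/patch) grid.

    tokens[0] is assumed to be the readout (class) token; this sketch uses the
    simplest "ignore" variant and simply drops it.
    """
    rows, cols = height // patch, width // patch
    patch_tokens = tokens[1:]
    if len(patch_tokens) != rows * cols:
        raise ValueError(f"expected {rows * cols} patch tokens, got {len(patch_tokens)}")
    # Patch tokens follow raster order (left to right, top to bottom),
    # so grid row r holds tokens r*cols .. r*cols + cols - 1.
    return [patch_tokens[r * cols:(r + 1) * cols] for r in range(rows)]
```

For example, a 384 × 384 input with 16 × 16 patches gives 576 patch tokens, which this function arranges into a 24 × 24 grid; each grid cell then plays the role of one "pixel" of a low-resolution feature map.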

WYSIWYT stands for “What You See Is What You Text”: thanks to DPT technology, the designer sees in his favourite 3D modelling environment exactly what he has texted as input.

Now let’s jump to the installation requirements and the main features.

Requirements

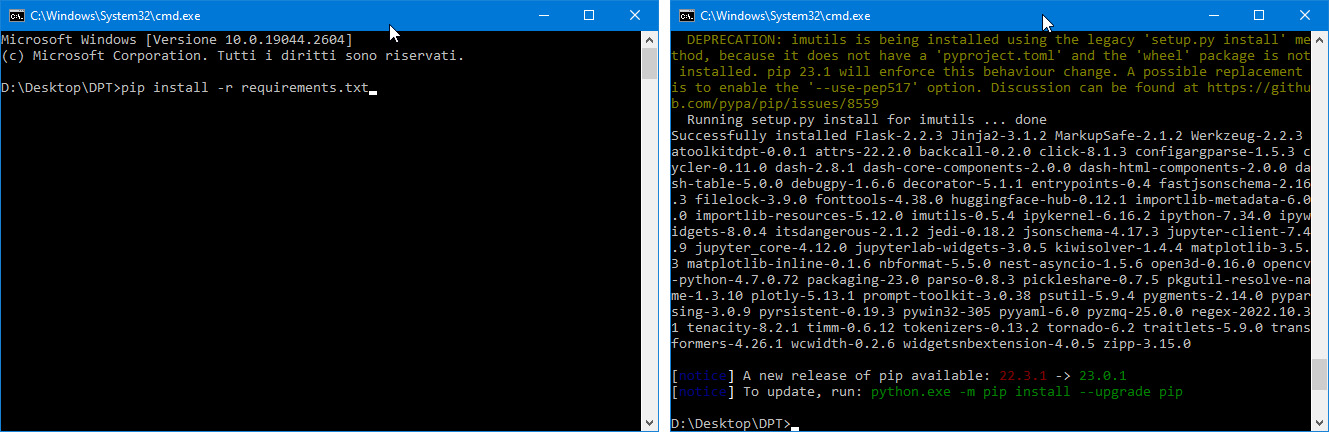

To run the DPTto3D component (subcategory AI), some Python libraries are required, as for the other AI tools. For this reason, I have shared a “requirements.txt” file that lets the designer install them all with a single command from cmd.exe (on Windows). After downloading the file to a custom folder (I suggest C:/CustomFolder or something similar), open cmd.exe, move into “CustomFolder” and run the following command: pip install -r requirements.txt, then wait until the command prompt appears again (see the image below on the right).

This procedure currently installs all the Python libraries required by Ambrosinus-Toolkit, which are:

In particular, I have created the atoolkitdpt Python library in order to run DPT estimation inside Grasshopper through the MiDaS pre-trained models shared by the Intel researchers. This Python package is available directly from the PyPI repository at this link, and all future updates will be publicly announced on my GitHub page AToolkitDpt. It is essential to download at least one of the 8 weights models shared by the Intel researchers: these are pre-trained models (ranging from 80 MB to 1.5 GB in size) able to generate depth maps (see the aforementioned GitHub page for details). Finally, GH CPython is still required to run the “DPTto3D” component properly, as for all the other AI tools.
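The exact package list in requirements.txt isn't reproduced in this post, so the following is only a generic sketch (function names hypothetical) to confirm, after the pip command above, that every package listed in a requirements.txt is actually installed. It uses only the standard library (`importlib.metadata`, Python 3.8+); run it with the same Python interpreter you installed the requirements into.

```python
import re
from importlib import metadata
from typing import Dict, List, Optional

# Distribution name at the start of a requirement line,
# e.g. "somepkg>=1.0" -> "somepkg", "some-pkg[extra]==2.1" -> "some-pkg".
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def parse_requirements(text: str) -> List[str]:
    """Return the package names listed in the text of a requirements.txt file."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop inline and full-line comments
        if not line or line.startswith("-"):  # skip blanks and pip options such as "-r"
            continue
        match = _NAME.match(line)
        if match:
            names.append(match.group(1))
    return names


def installed_versions(names: List[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    result: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            result[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            result[name] = None
    return result
```

A typical call is `installed_versions(parse_requirements(Path("C:/CustomFolder/requirements.txt").read_text()))` (with `from pathlib import Path`); any `None` value means that package is missing and the pip command should be run again.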

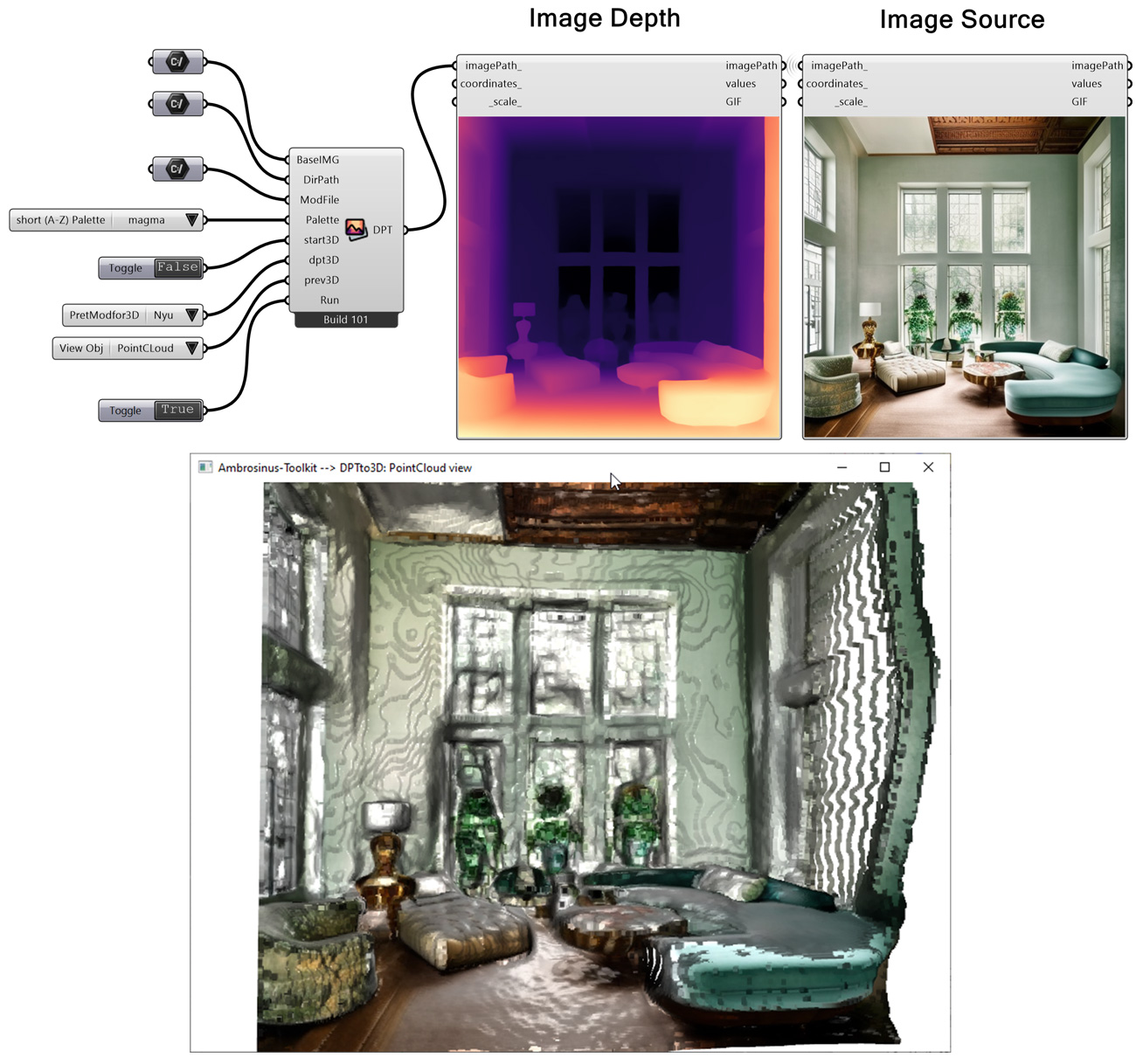

Parameters

- BaseIMG: the image source file;

- DirPath: the folder where all files generated will be stored;

- ModFile: the weights model file (.pt format) used to run the DPT process; more info on the GitHub page AToolkitDpt;

- Palette: values from 0 to 166 select one of the Matplotlib palettes; 167 and 168 select ATk-Gray and ATk-Gray_r (reversed), two special gray palettes (see full image);

- start3D: if True, generates the PLY file containing all the estimated PointCloud coordinates;

- dpt3D: if start3D is True, the user needs to select either the NYU or the KITTI weights model (for interior design, NYU gives better results);

- prev3D: opens a viewport with an estimated preview of the PointCloud or of the 3D Mesh object;

- Run: runs this component
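The component's own palette code isn't shown in this post. Assuming that a gray palette simply maps the depth range linearly onto gray levels, the sketch below illustrates what a gray palette and its reversed "_r" variant (like ATk-Gray and ATk-Gray_r) do to a depth map: values are min-max normalized to 0 to 255, and the reversed variant flips the gradient. The function name `to_gray` is hypothetical.

```python
from typing import List

DepthMap = List[List[float]]


def to_gray(depth: DepthMap, reverse: bool = False) -> List[List[int]]:
    """Min-max normalize a depth map to 8-bit gray levels (optionally reversed)."""
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a perfectly flat map
    gray = []
    for row in depth:
        out = []
        for v in row:
            g = round(255 * (v - lo) / span)  # 0 for the minimum, 255 for the maximum
            out.append(255 - g if reverse else g)  # "_r" variant flips the gradient
        gray.append(out)
    return gray
```

With Matplotlib palettes the same normalized values would instead be looked up in a colour gradient; the "_r" suffix follows Matplotlib's naming for reversed colormaps.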

Main features

Through DPT technology, the DPTto3D component can estimate depth map information directly from a 2D RGB image. From this map, it can predict a PointCloud, which is then stored in a PLY file. PLY is the Polygon File Format, also known as the Stanford Triangle Format; I opted for the ASCII encoding so that the designer can clearly read all the geometric information and then manipulate it within Grasshopper/Rhino or even MeshLab. In summary, the component can:

- generate the monocular depth map estimation from 8 different types of weights models (pre-trained models provided by Intel researchers)

- generate a PointCloud and, in some specific cases, a 3D object in mesh format (shown to the designer in a preview window)

- generate a depth map (in the 3D case) from the NYU or KITTI weights models (NYU is especially suitable for interior images)

- generate depth maps with different Matplotlib palettes (gray, inferno, magma, rainbow, viridis and many others, including the reversed gradients)

- store the 3D coordinates of the PointCloud in the PLY file format
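The post doesn't detail how DPTto3D turns the depth map into coordinates, so the following is only a generic sketch of one common approach: back-projecting every pixel through a pinhole camera model and writing the points as an ASCII PLY file. The focal length, the principal point at the image centre, and the assumption that the values are depths (rather than the relative inverse depth that MiDaS models typically output) are all assumptions; the function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], focal: float) -> List[Point]:
    """Back-project each pixel (u, v) with depth z through a pinhole camera."""
    height, width = len(depth), len(depth[0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0  # assumed principal point: image centre
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth
            x = (u - cx) * z / focal
            y = (cy - v) * z / focal  # image rows grow downwards; flip so +y is up
            points.append((x, y, z))
    return points


def to_ascii_ply(points: List[Point]) -> str:
    """Serialize points as an ASCII PLY file with x, y, z float properties."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in points]
    return "\n".join(header + body) + "\n"
```

Writing the result is then a one-liner such as `Path("C:/CustomFolder/cloud.ply").write_text(to_ascii_ply(depth_to_points(depth, focal=500.0)))`, where `focal=500.0` (in pixels) is an arbitrary guess. Because the encoding is ASCII, the header and every `x y z` line can be inspected in any text editor, which is the readability benefit mentioned above.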

Nonetheless, I suggest evaluating the source image first and then considering manipulating the PointCloud with appropriate software or with an extra Grasshopper definition. Bear in mind that this procedure is still experimental, so the final mesh will often not closely resemble the source image (for now, it works better with indoor images); the PointCloud, on the other hand, is a good starting point. Each time the designer selects the 3D feature, the DPTto3D component uses the NYU or KITTI model to generate the depth map.
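For designers who prefer a script to an extra Grasshopper definition, here is a minimal sketch (plain Python, hypothetical function names) that reads an ASCII PLY point cloud, drops points beyond a chosen distance and thins the cloud by averaging the points in each cell of a voxel grid. It assumes that the vertex element is declared first, that x, y, z are its first three properties, and that z holds the distance from the camera; `max_depth` and `voxel` are values to tune for each scene.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def read_ascii_ply_vertices(text: str) -> List[Point]:
    """Return the (x, y, z) of every vertex in an ASCII PLY string."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "ply":
        raise ValueError("not a PLY file")
    count, header_end = 0, None
    for i, line in enumerate(lines):
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "format" and parts[1] != "ascii":
            raise ValueError("only ASCII PLY is supported")
        if parts[:2] == ["element", "vertex"]:
            count = int(parts[2])
        if line.strip() == "end_header":
            header_end = i
            break
    if header_end is None:
        raise ValueError("missing end_header")
    points: List[Point] = []
    for row in lines[header_end + 1:header_end + 1 + count]:
        x, y, z = (float(v) for v in row.split()[:3])  # first three properties
        points.append((x, y, z))
    return points


def clean_point_cloud(points: List[Point], max_depth: float, voxel: float) -> List[Point]:
    """Drop points farther than max_depth, then average the points in each voxel."""
    cells: Dict[Tuple[int, int, int], List[Point]] = {}
    for x, y, z in points:
        if z > max_depth:  # assumes z is the distance from the camera
            continue
        key = (int(x // voxel), int(y // voxel), int(z // voxel))
        cells.setdefault(key, []).append((x, y, z))
    cleaned: List[Point] = []
    for members in cells.values():
        n = len(members)
        cleaned.append((
            sum(p[0] for p in members) / n,
            sum(p[1] for p in members) / n,
            sum(p[2] for p in members) / n,
        ))
    return cleaned
```

Averaging per voxel keeps the overall shape while reducing the point count, which usually makes later meshing in Rhino or MeshLab lighter; a larger `voxel` value gives a coarser cloud.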

Below is a 3D view of another tested interior image:

The video below shows several highlights, such as the DPTto3D outcomes (all the generated files) and some Rhino 7 and Rhino 8 visualizations. Have a look!

Video demo

Download this component from HERE